Private AI Copilot in a Box – Powered by NVIDIA DGX Spark

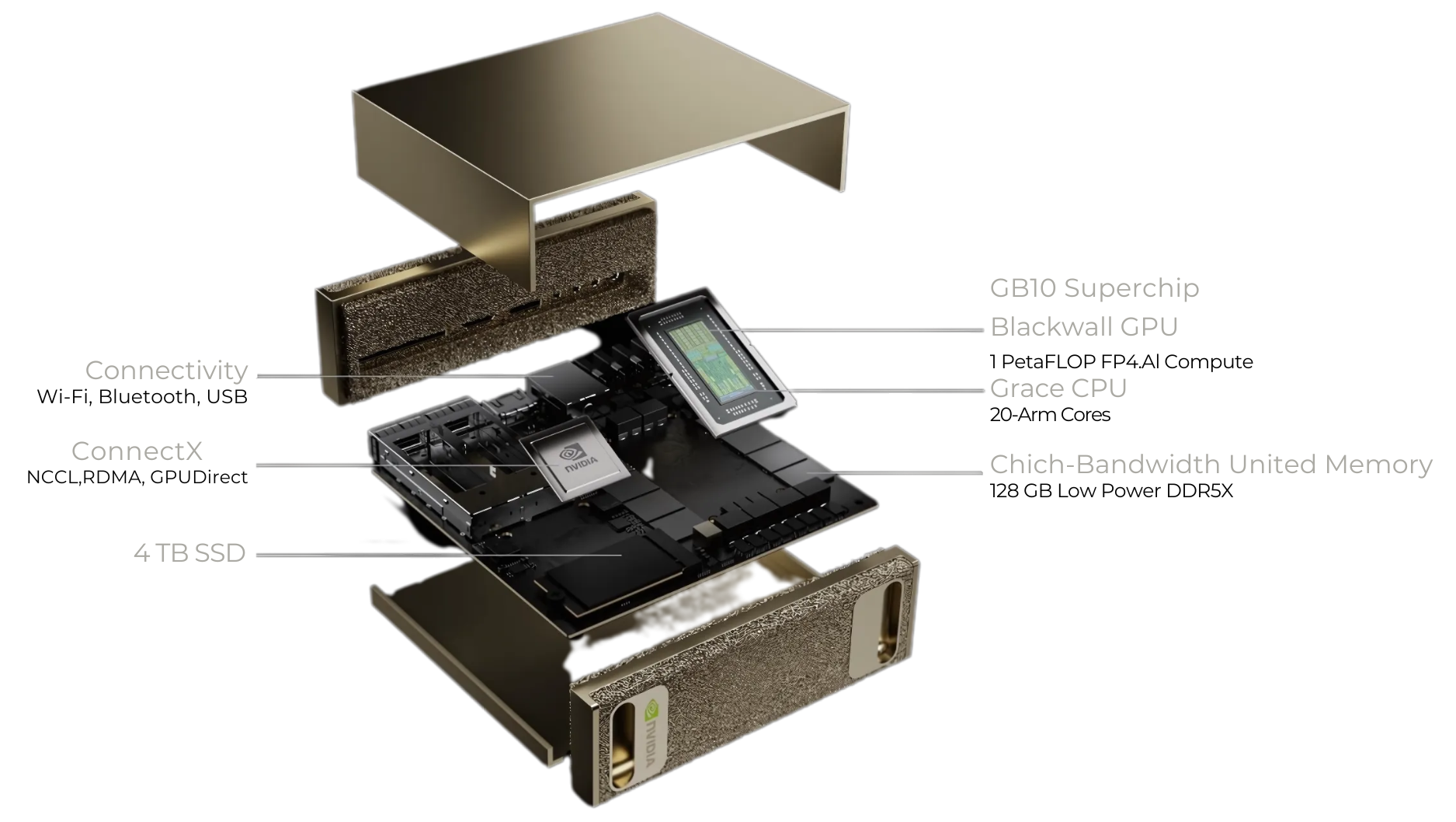

DGX Spark is a petaflop-class NVIDIA system that runs your own copilots, RAG, and AI agents on your data, inside your perimeter. Fast to deploy, easy to scale into a full AI Factory.

Run advanced open models and RAG locally

Keep data fully sovereign and compliant

Scale from 1 box to DGX / OVX and full AI Factories

DGX Spark is a compact NVIDIA system built on the same architecture as DGX, OVX, and DGX Cloud. It brings enterprise-grade AI capability into a desktop-sized form factor, ideal for pilots, labs, and secure environments.

Build, test, and refine AI projects in days. With a small footprint (≈15×15×5 cm, 1.2 kg), Spark is highly portable: carry it between offices or countries, place it on a boardroom table, or install it in any office space.

Run AI anywhere with just electricity—no internet required for inference once models and data are downloaded. Perfect for secure facilities, remote branches, and temporary project sites.

Clear upgrade path with the same software concepts as DGX/OVX/Cloud—containers, APIs, and model serving frameworks. Whatever works on Spark can be promoted to DGX/OVX clusters or mirrored in DGX Cloud.
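As a rough illustration of what "promoting" a workload can look like, the sketch below builds the same chat request for two different serving endpoints, changing only the base URL. It assumes the model server exposes an OpenAI-compatible `/v1/chat/completions` route, which this page does not state. The host names and model name are hypothetical placeholders.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat request for an OpenAI-compatible server.

    Assumption: the serving layer exposes POST /v1/chat/completions.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical endpoints: only the base URL differs between environments.
SPARK_URL = "http://spark.internal:8000"
CLUSTER_URL = "http://dgx-cluster.internal:8000"

pilot_request = build_chat_request(SPARK_URL, "my-local-model", "Summarize our leave policy.")
cluster_request = build_chat_request(CLUSTER_URL, "my-local-model", "Summarize our leave policy.")

# Sending is left to the caller, e.g. urllib.request.urlopen(pilot_request)
```

Keeping the endpoint in configuration rather than in application code is one way to keep the client unchanged as the serving location moves.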

ConnectX networking enables linking multiple Sparks or connecting to broader DGX/OVX environments. Spark isn't a dead-end gadget—it's how you start your AI factory.

All compute and data stay inside your perimeter, with full offline capability.

Easier compliance conversations with:

One-time CapEx for years of usage, with no per-hour cloud GPU billing or surprise costs from usage spikes. Dedicated hardware means performance is not affected by other tenants, unlike shared cloud instances, where multi-tenancy can degrade performance. The result is predictable total cost and performance for budget and project planning.
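The CapEx comparison can be reduced to a simple break-even calculation. The sketch below is a simplification: it ignores power, support, and depreciation. The prices are hypothetical placeholders, not quotes for DGX Spark or for any cloud provider.

```python
def break_even_hours(capex: float, cloud_rate_per_hour: float) -> float:
    """Hours of cloud GPU usage at which cumulative rental equals the one-time purchase."""
    if cloud_rate_per_hour <= 0:
        raise ValueError("cloud_rate_per_hour must be positive")
    return capex / cloud_rate_per_hour


# Placeholder figures for illustration only; substitute your own quotes.
EXAMPLE_CAPEX = 4000.0       # hypothetical one-time hardware cost
EXAMPLE_CLOUD_RATE = 2.0     # hypothetical cloud GPU price per hour

hours = break_even_hours(EXAMPLE_CAPEX, EXAMPLE_CLOUD_RATE)
print(f"Break-even after {hours:.0f} GPU-hours of cloud usage")
```

With your own figures, dividing the result by expected GPU-hours per month gives a rough payback period.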

DGX Spark delivers immediate value across diverse AI applications

Deploy secure, internal copilots for HR, compliance, or operations. Keep sensitive knowledge within your organization.

Automate extraction, tagging, and summarization of confidential reports or forms without cloud exposure.

Analyze sensor and camera feeds locally, summarize insights with LLMs at the edge for real-time decision making.

Run coordinated agents for reporting, scheduling, or data-driven automation. Orchestrate complex AI workflows.

Accelerate existing analytics, training, and feature engineering using GPU-optimized frameworks for faster insights.

Total Control

Complete control over data and cost with no cloud dependencies

Predictable Pricing

No per-hour GPU billing or surprise costs from usage spikes

Consistent Performance

No shared tenancy or performance variability

128 GB Unified Memory

Fits large models in a single memory pool shared by CPU and GPU

Ready to Run

Ships with DGX OS and NVIDIA AI Enterprise pre-configured

Enterprise Networking

ConnectX networking enables multi-Spark or cluster expansion

Ideal Entry Point

Perfect for labs and small teams before scaling up

Seamless Upgrade Path

Direct migration to full-scale DGX/OVX clusters when ready

Same Software Stack

Use identical tools throughout your AI journey

Start small and scale seamlessly with a unified architecture

Run pilots and prototypes. Validate AI use cases with real data in a secure environment.

Scale workloads and teams. Add capacity by connecting multiple Spark systems together.

Move to production-grade AI Factory. Deploy enterprise-scale infrastructure with proven ROI.

Extend capacity securely when needed. Burst to cloud while maintaining on-prem control.

Identify high-value opportunities aligned with your business goals. We work with your team to prioritize AI use cases that can deliver impact quickly.

Since Spark arrives pre-configured, our focus is on enabling your team: connecting data sources, deploying your chosen copilots or frameworks, and ensuring security and governance are properly aligned.

Deploy multiple AI use cases across different functions using your own data — from copilots and document intelligence to analytics or automation. Demonstrate measurable business value and establish a repeatable framework for scaling across the enterprise.

Define the path from pilot to full AI Factory. Plan expansion to DGX or OVX clusters, including performance benchmarking, ROI modeling, and training for internal teams.

Yes, fully air-gapped once data and models are downloaded. This makes it perfect for secure environments, classified networks, or locations with limited connectivity. All AI processing happens locally on the device.

It's ideal for small AI teams or pilot labs. Think of it as your AI experimentation platform for 5-15 users. For enterprise-wide deployments, you'll want to scale to DGX or OVX clusters, but everything you build on Spark will transfer seamlessly.

Everything you build on Spark runs on DGX or OVX. The same software stack, tools, and workflows transfer directly. You can connect multiple Sparks together, or when ready, migrate to full DGX BasePOD or SuperPOD configurations without rewriting code.

DGX Spark can run popular open models including Llama, Mistral, Falcon, and domain-specific models. With 128 GB of unified memory, you can run large language models of up to 200B parameters when the weights are quantized to around 4 bits per parameter, and handle complex multimodal AI workloads.
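A back-of-the-envelope check shows how model size relates to the 128 GB figure. The sketch below estimates memory for the weights alone at a few precisions. It deliberately ignores the KV cache, activations, and operating system overhead, so treat it as a lower bound rather than a sizing guarantee. The function name is illustrative.

```python
UNIFIED_MEMORY_GB = 128  # unified memory figure quoted on this page


def weight_memory_gb(params_billions: float, bits_per_param: int) -> float:
    """Approximate memory for model weights only, in GB (10^9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_param / 8
    return total_bytes / 1e9


for bits in (16, 8, 4):
    needed = weight_memory_gb(200, bits)
    fits = needed < UNIFIED_MEMORY_GB
    print(f"200B parameters at {bits}-bit: ~{needed:.0f} GB of weights, fits in 128 GB: {fits}")
```

Under these assumptions, a 200B-parameter model needs roughly 400 GB at 16-bit, 200 GB at 8-bit, and 100 GB at 4-bit, so only the 4-bit case leaves headroom within 128 GB.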

DGX Spark is the fastest, safest way to make AI real with your data and your rules — a single box that grows with your ambition.